This article is the fifth in a series covering how Uber’s mobile engineering team developed the newest version of our driver app, codenamed Carbon, a core component of our ridesharing business. Among other new features, the app lets our population of over three million driver-partners find fares, get directions, and track their earnings. We began designing the new app in conjunction with feedback from our driver-partners in 2017, and began rolling it out for production in September 2018.

Uber’s users depend on our apps as the primary tools to access our services. Building our new and improved driver app took a lot of collaborative design work and many developer hours. Launching this app quickly and seamlessly for our driver-partners around the world also took a lot of thoughtful planning; a positive and smooth launch experience was critical for ensuring that driver-partners could continue to rely on our platform, as well as maintaining the integrity of our business.

With the Android version of our new driver app, we couldn’t take the chance that its launch would negatively impact our users, so we took the unlikely path of shipping two apps, or binaries, in one package. While uncommon, this approach let us roll out the new app to a percentage of our driver-partners in specific cities as a beta, while maintaining others on the existing app.

We gained experience in this strategy when we launched the Android version of our new rider app in 2016, where we also shipped two apps in one package.

For the driver app to support a combined package of both our existing and beta apps, we had to make changes to our application class, launcher activities, receivers, and services so they could function in either standalone or combined modes. We also needed to add logic to switch between modes gracefully and at convenient times to make these changes as transparent as possible to our driver-partners, letting them continue their business unaffected.

This rollout strategy has been proving its worth as we make our new app available to our over three million driver-partners as seamlessly as possible.

Two apps in one

The idea to ship two versions of an app in one package was the result of a few different requirements. First of all, when we built our new rider app in 2016, the Uber platform had not yet transitioned to a monorepo, so we used two repositories, one for the existing app and one for the new version. By building our new app from scratch, we found that engineers were able to iterate quickly, design solutions in a clean space without any tech debt, and build the new app using a newly-adopted Buck build system. This approach also ensured that the new app did not accidentally leak into the older app’s binary, which might be decompiled by an enthusiast or competitor and give away the rewrite plans.

We built the new driver app in the monorepo where the old app lived, but waited until a late stage in development to merge the two apps into one package. Separating the two apps early on let us keep updating the old app while releasing a standalone beta version of the new app. The standalone beta app supported our beta program, in which we released it to a select set of drivers around the world and gathered valuable feedback. As our final launch approached, leveraging one package with two apps gave us more control over the release process.

Second, while the Google Play Store offers tools that let developers easily set up rollouts by percentage or even control the rollout in specific markets, we needed finer-grained control over this release, as the app needed to be adjusted for different environments and policies at the city level. Along with location, timing is very important, as we don’t want to initiate an update in the middle of a trip, causing the driver to lose app functionality such as navigation and fare computation. We also perform thorough A/B tests on reimplemented or newly designed features to give us confidence that our product runs as designed. Having one app containing the old and new apps lets us build mechanisms to control the rollout by user, timing, and region.

Finally, we needed to guarantee a safe rollout with a high level of confidence that the app will still function reliably under a variety of conditions. By shipping the older version of the app along with the major rewrite, we can adjust the variables for the rollout or fall back to an app that has a proven record of stability.

Combining the two apps

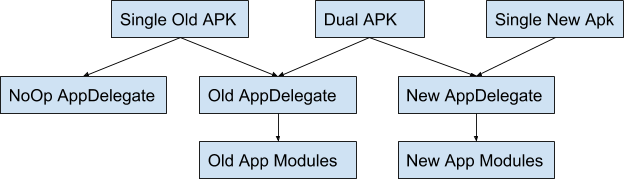

Packaging the new and old apps together, which we call Dual Binary, involves treating the new app as an Android library and adding it as a dependency of the old app’s application module. Before that happens, we first need to push down all the logic in each Application subclass into a class we called the AppDelegate. This allows each app’s application-level code to have a minimal surface area so that it can be easily integrated into whichever Application class is necessary.

    public class DualBinaryApplication extends Application {

        private AppDelegate appDelegate;

        @Override
        public void onCreate() {
            super.onCreate();
            // Choose which app's delegate to load. In a single binary build of
            // the old app, NewAppDelegateFactory comes from the no-op module
            // and returns a no-op AppDelegate.
            if (BuildConfig.IS_DUAL && shouldLaunchNewApp(this)) {
                appDelegate = NewAppDelegateFactory.create(this);
            } else {
                appDelegate = OldAppDelegateFactory.create(this);
            }
        }
    }

For our purposes, we had three different builds: the Dual Binary app, the single binary old app, and the single binary new app. When we wanted to build the Dual Binary app, we set an IS_DUAL Gradle property to true, which was read in the old app’s build.gradle. This property controlled whether or not the new app’s code was added as a compile dependency, and it also created and set BuildConfig.IS_DUAL accordingly via buildConfigField in the Android Gradle configuration. With both new and old AppDelegate classes available, we could insert logic into the old app’s Application subclass to control which AppDelegate was loaded when the Dual Binary app started.

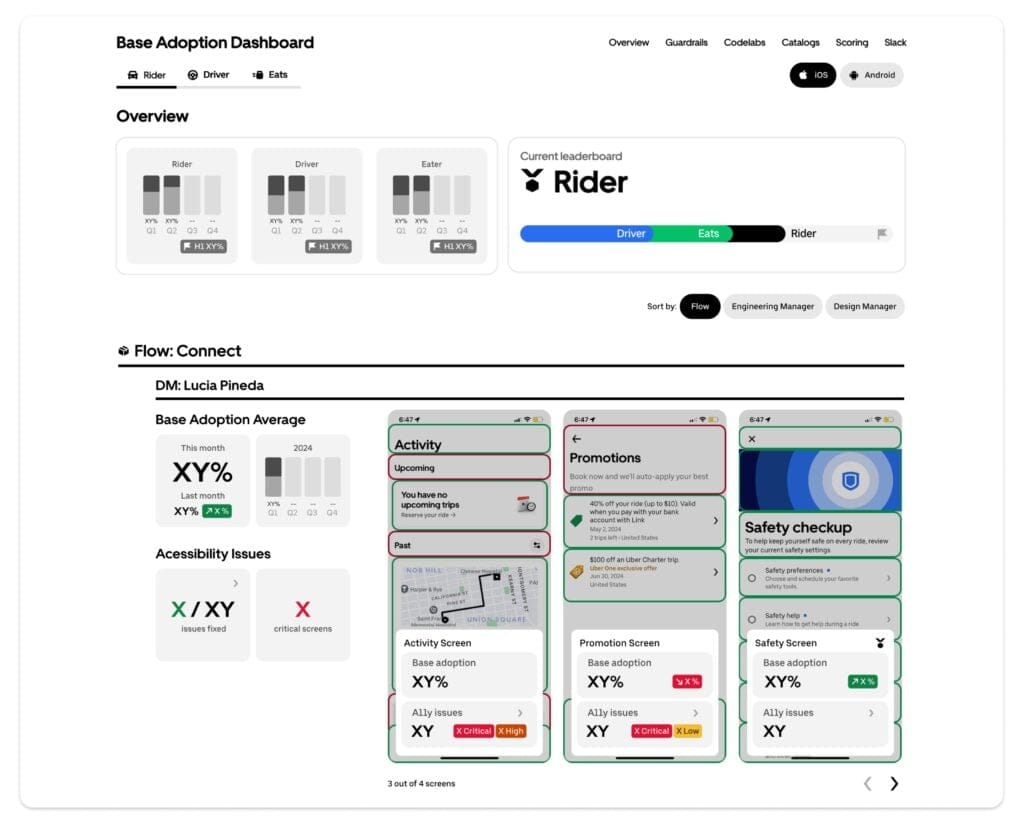

The single binary old app was still needed so that we could continue shipping it using the same weekly build schedule while we developed the new app. We added a no-op module as a dependency containing a NoOp AppDelegate, shown in Figure 1, above, which had zero dependencies and let us compile under our Dual Binary logic as we moved towards shipping the new app as a single binary. The single binary old app could then be built by setting the IS_DUAL Gradle property to false. The single binary new app was also necessary so that engineers could quickly build and iterate on the new product during the development phase. Creating the single binary new app involves hooking up the New AppDelegate to the NewApplication.
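The relationship between the delegates and the build flag can be sketched in plain Java. This is a minimal illustration only: the `AppDelegate` method, the `OldAppDelegate` body, and the `DelegateSelector` helper are hypothetical stand-ins, and the Android `Application` class is left out so the example runs on its own.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical shape of the delegate: the real interface is not shown in this
// article, so a single lifecycle method is assumed here.
interface AppDelegate {
    void onCreate();
}

// Stand-in for the old app's application-level logic.
final class OldAppDelegate implements AppDelegate {
    private final List<String> log;

    OldAppDelegate(List<String> log) {
        this.log = log;
    }

    @Override
    public void onCreate() {
        log.add("old app initialized");
    }
}

// Lives in the no-op module: it has no dependencies and does nothing, so the
// single binary old app still compiles against the Dual Binary logic.
final class NoOpAppDelegate implements AppDelegate {
    @Override
    public void onCreate() {
        // Intentionally empty.
    }
}

final class DelegateSelector {
    // Mirrors the condition in the Application subclass: the new delegate is
    // only chosen in a dual build, and only when the rollout logic says so.
    static AppDelegate select(boolean isDual, boolean shouldLaunchNewApp,
                              AppDelegate newDelegate, AppDelegate oldDelegate) {
        return (isDual && shouldLaunchNewApp) ? newDelegate : oldDelegate;
    }
}
```

Because the build flag is checked first, a single binary old app never reaches the no-op delegate at runtime; the no-op module exists only to keep the reference to the new app's factory compilable.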

Similar to how we created a place to introduce switching logic for application-level code, we used a springboard activity to introduce activity-level code. When this activity was launched, it first checked with the Application subclass to see which AppDelegate was loaded, then forwarded the intent to the new or old app’s main activity and called finish() to make the springboard go away before any UI was shown. The call to finish() also removed the springboard from the back stack, so that the user was not awkwardly sent back to it when they pressed the back button.

When using a springboard activity, we needed to make sure that the intended activity intent flags were properly declared. Also, if someone started our app’s main entry point for a result, then we needed to override Activity#getCallingPackage() and Activity#getCallingActivity() to ensure that the springboard passed along the correct information so that the result could be returned to the appropriate caller.

The other entry points we needed to consider were receivers and services. If the component was not shared between the new and old apps, then the Application subclass would programmatically enable/disable it using PackageManager.setComponentEnabledSetting before loading the app delegate. If the component was shared between the new and old apps, such as a receiver in a push notification library module, then the component needed to check with the Application subclass to see which AppDelegate was loaded before bridging to the new or old app’s handling of the push notification.
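The two cases for receivers and services can be modeled without the Android framework. The sketch below is an assumption-laden illustration: `EntryPointRouting`, `Mode`, the component names, and the returned strings are all hypothetical. On a device, each computed state would be applied through PackageManager.setComponentEnabledSetting rather than stored in a map.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class EntryPointRouting {

    // Which app mode the Application subclass decided on at launch.
    enum Mode { OLD_APP, NEW_APP }

    // Case 1: components owned by only one app. Returns the desired enabled
    // state for each component name (true = enabled, false = disabled).
    static Map<String, Boolean> desiredComponentStates(
            Mode mode, List<String> oldOnly, List<String> newOnly) {
        Map<String, Boolean> states = new LinkedHashMap<>();
        for (String component : oldOnly) {
            states.put(component, mode == Mode.OLD_APP);
        }
        for (String component : newOnly) {
            states.put(component, mode == Mode.NEW_APP);
        }
        return states;
    }

    // Case 2: a component shared by both apps, such as a push receiver. It
    // stays enabled and asks which mode is active before bridging.
    static String handlePush(Mode mode, String payload) {
        return mode == Mode.NEW_APP
                ? "new app handled: " + payload
                : "old app handled: " + payload;
    }
}
```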

When merging the new app into the old app, there were additional code changes we had to consider to make the rollout successful and seamless. These are questions any engineer considering a rollout approach similar to ours might want to ask:

- Did the new app’s build.gradle have any config that needed to be ported to the old one?

- Were all relevant AndroidManifest.xml declarations and settings merged in properly?

- Did the dual binary app declare the union of all permissions and features?

- Were we migrating any user/auth data from the old app’s storage to the new app?

- Did the old and new apps have any XML resources with the same names? If so, then we might get unpredictable results in our UI. To easily avoid this issue, we made sure to use resource prefixes.

- If we didn’t have a monorepo, which was the case when we launched our rider app, then we would need to come up with a versioning scheme for the new app’s artifacts and ensure that versions of the old app are compatible with the appropriate artifacts.

Rolling out the new app

The Dual Binary app had several mechanisms built into it to keep the rollout controlled and safe: an opt-in feature flag, client-side bucketing, an experiment kill switch, and crash recovery.

    private boolean shouldLaunchNewApp(Context context) {
        RolloutPreferences rolloutPrefs = new RolloutPreferences(context);
        if (rolloutPrefs.isCrashRecoveryForceOldApp()
                || rolloutPrefs.isKillSwitchForceOldApp()) {
            // Safety overrides always win and keep the driver on the old app.
            return false;
        } else if (rolloutPrefs.isNewAppFeatureEnabled()) {
            // Cached value of the server-driven opt-in flag.
            return true;
        } else {
            // Otherwise, fall back to client-side bucketing.
            String deviceId = DeviceUtils.getDeviceId(context);
            int rolloutPercentForRegion = getNewAppRolloutPercent(context);
            return clientSideBucket(deviceId, rolloutPercentForRegion);
        }
    }

We couldn’t use our server-driven feature flagging system directly, since reading a flag would require an AppDelegate to be initialized already, and choosing which AppDelegate to initialize was the very decision being made at app launch. Instead, we opted for a second-session approach, in which the active AppDelegate listened to the server value for the LAUNCH_NEW_APP flag and cached the result in a SharedPreference outside of the typical experiment storage. The next time the Dual Binary app was launched, it read the SharedPreference value and launched the appropriate AppDelegate.
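The second-session pattern can be illustrated with a small plain-Java model. This is a sketch under stated assumptions: `SecondSessionFlag` and its method names are hypothetical, the key string is made up, and a `Map` stands in for the SharedPreference so the example runs without Android.

```java
import java.util.HashMap;
import java.util.Map;

final class SecondSessionFlag {
    // Hypothetical storage key for the cached LAUNCH_NEW_APP value.
    static final String KEY = "launch_new_app";

    // Stand-in for a SharedPreferences file that survives across launches.
    private final Map<String, Boolean> store;

    SecondSessionFlag(Map<String, Boolean> store) {
        this.store = store;
    }

    // Session N: the active AppDelegate receives the server value and caches
    // it. Nothing changes for the currently running session.
    void onServerValue(boolean launchNewApp) {
        store.put(KEY, launchNewApp);
    }

    // Session N+1: read before any AppDelegate exists. Defaults to false
    // (old app) when no value has ever been cached.
    boolean readAtLaunch() {
        return store.getOrDefault(KEY, false);
    }
}
```

The default of false for a missing value is an assumption chosen so that a device with no cached flag stays on the old app.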

The downside to the feature flag approach was that a change to the server value of the flag required a second launch of the app before it took effect. This meant that we were unable to test the new app’s effect on new sign-ups and log-ins. To counteract this, we implemented client-side bucketing, deciding the value of the feature flag locally on the device. If the value of the LAUNCH_NEW_APP SharedPreference was false, the device would derive a number between 0 and 100 from its own device information, so that the number was effectively random across devices but remained constant on any given device. If that number was lower than the hard-coded, per-build rollout percentage, then the NewAppDelegate would be launched. This strategy provided a safe, gradual rollout that still enabled validation of the sign-up flow.
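A minimal sketch of deterministic client-side bucketing follows. The article does not say how the per-device number was derived, so the CRC32 hash here is an assumption chosen for illustration, and `ClientSideBucketing` and `bucketFor` are hypothetical names; only `clientSideBucket` echoes the method called in the snippet above.

```java
import java.nio.charset.StandardCharsets;
import java.util.zip.CRC32;

final class ClientSideBucketing {

    // Maps a device ID to a stable bucket in the range 0..99. The same ID
    // always yields the same bucket, on every launch and in every build.
    static int bucketFor(String deviceId) {
        CRC32 crc = new CRC32();
        crc.update(deviceId.getBytes(StandardCharsets.UTF_8));
        // getValue() is a non-negative long, so the remainder is in 0..99.
        return (int) (crc.getValue() % 100);
    }

    // The device is in the rollout when its bucket falls below the
    // percentage: 0 admits no devices and 100 admits all of them.
    static boolean clientSideBucket(String deviceId, int rolloutPercent) {
        return bucketFor(deviceId) < rolloutPercent;
    }
}
```

Because the bucket depends only on the device ID, raising the rollout percentage in a later build keeps every previously admitted device in the new app and only adds new ones.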

In the event that something was awry with the new app mode of the Dual Binary or there was a problem with client-side bucketing, we implemented an additional FORCE_OLD_APP feature flag. The value that the app received from the server was cached to SharedPreferences, just as with the flag outlined above.

Since our new app uses server-driven feature flags, it was important that an app running in the new mode could still successfully receive values from the server. To protect that, we added a feature called Crash Recovery. This lightweight, minimal-dependency system tracked signals such as the number of app launches, the number of network responses from the feature flag server, and the app lifecycle. In the event that the NewAppDelegate tried to load, but never got far enough through the launch sequence to receive a feature flag payload, the system would execute an increasingly strong series of recovery actions. After a single failed launch, the system cleared storage caches. After a second consecutive failed launch, the system cleared local experiment flag values (except for a handful of whitelisted flags such as the LAUNCH_NEW_APP flag). If the app tried to launch the NewAppDelegate a third time and failed to receive experiments in a reasonable amount of time, then the Dual Binary app was reverted to its old app mode until the next app update, ensuring that the driver had a working app so they could continue accepting rides.
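The escalation described above can be sketched as a small state machine. This is an illustrative model rather than the actual Crash Recovery code: the class, enum, and method names are hypothetical, resetting the counter after a successful payload is an assumption, and the counter is held in memory here even though a real system would need to persist it across launches.

```java
final class CrashRecovery {

    // Recovery actions, ordered from weakest to strongest.
    enum Action { NONE, CLEAR_STORAGE_CACHES, CLEAR_EXPERIMENT_FLAGS, FORCE_OLD_APP }

    // Consecutive new-app launches that never received a flag payload.
    private int consecutiveFailedLaunches;

    // Call when a new-app launch ends without a feature flag payload.
    Action onLaunchFailed() {
        consecutiveFailedLaunches++;
        return actionFor(consecutiveFailedLaunches);
    }

    // Call when a flag payload arrives: the launch is healthy, so the
    // escalation starts over (an assumption, not stated in the article).
    void onFlagPayloadReceived() {
        consecutiveFailedLaunches = 0;
    }

    // Maps the failure count to the action described in the text.
    static Action actionFor(int failures) {
        if (failures <= 0) {
            return Action.NONE;
        } else if (failures == 1) {
            return Action.CLEAR_STORAGE_CACHES;
        } else if (failures == 2) {
            return Action.CLEAR_EXPERIMENT_FLAGS;
        } else {
            return Action.FORCE_OLD_APP;
        }
    }
}
```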

These various mechanisms helped stabilize the code in the old and new modes of the app, but the Dual Binary control logic was the first code executed on app launch, so it needed to be just as stable. Before performing the actual rollout, we tested each of the mechanisms in the wild by simulating a rollout and using analytics to confirm that the expected number of users were in the appropriate mode of the Dual Binary app. This testing greatly increased our confidence in the Dual Binary control logic.

Lessons learned

When it was time to enable the app’s new mode, we ran into a few issues that proved the value of our Dual Binary approach to app development. In one such incident, our data scientists discovered a drop in business metrics for the new app mode’s performance in one region. The ability to adjust rollout percentages in a more fine-grained manner let us continue the rollout elsewhere, while engineers remedied the regional issue, which turned out to be a missing payment flow. Without Dual Binary, we would have had to pause the entire build train for weeks to months while research and development was done to resolve this issue, thereby blocking the growth of other features in addition to necessary bug fixes.

We also learned that it is crucial to have a server-driven fallback flag as the last level of the rollout control mechanism. We tested client-side bucketing by enabling the new driver app for a list of 500 hard-coded device IDs. However, because device IDs are not unique to a single device, the new app was released to a much larger group of users than we expected, causing some regions to access the new app before we intended to roll it out. Since the new app was not yet stabilized for these markets, we forced these regions back to the old app by changing the FORCE_OLD_APP flag from the server. If we hadn’t been able to revert to the old app in these markets, we would have had to cut a hotfix build to mitigate this issue.

Our Dual Binary approach may be more complicated than simply updating users wholesale from one app to another, but it has proven its worth in supporting our driver-partners through a seamless experience. The Dual Binary let us take a careful, measured approach in delivering the new app, while providing a safety net just in case the rollout did not proceed as planned.

Index of articles in Uber driver app series

- Why We Decided to Rewrite Uber’s Driver App

- Architecting Uber’s New Driver App in RIBs

- How Uber’s New Driver App Overcomes Network Lag

- Scaling Cash Payments in Uber Eats

- How to Ship an App Rewrite Without Risking Your Entire Business

- Building a Scalable and Reliable Map Interface for Drivers

- Engineering Uber Beacon: Matching Riders and Drivers in 24-bit RGB Colors

- Architecting a Safe, Scalable, and Server-Driven Platform for Driver Preferences

- Building a Real-time Earnings Tracker into Uber’s New Driver App

- Activity/Service as a Dependency: Rethinking Android Architecture in Uber’s New Driver App

James Barr

James Barr is a software engineer on Uber’s Mobile Platform Frameworks team.

Zeyu Li

Software engineer Zeyu Li previously worked on Uber’s Driver Platform team, and is now on the Delivery-Partner Trip Experience team.

Posted by James Barr, Zeyu Li
