SBNet: Leveraging Activation Block Sparsity for Speeding up Convolutional Neural Networks

January 16, 2018

By applying convolutional neural networks (CNNs) and other deep learning techniques, researchers at Uber ATG Toronto are committed to developing technologies that power safer and more reliable transportation solutions.

CNNs are widely used for analyzing visual imagery and data from LiDAR sensors. In autonomous driving, CNNs allow self-driving vehicles to see other cars and pedestrians, determine their exact locations, and solve many other difficult problems that could not previously be tackled with conventional algorithms. To keep our autonomous systems reliable, these CNNs must run extremely fast on GPUs. Making CNNs faster and more accurate while reducing device cost and power consumption is an ongoing research priority.

As part of this effort, we developed Sparse Blocks Network (SBNet), an open source algorithm for TensorFlow that speeds up inference by exploiting sparsity in CNN activations. We show that, when combined with the residual network (ResNet) architecture, SBNet achieves speedups of up to one order of magnitude for autonomous driving. SBNet allows for real-time inference with deeper and wider network configurations, yielding accuracy gains within a reduced computational budget.

In this article, we discuss how we built SBNet and showcase a practical application of the algorithm in our 3D LiDAR object detector for autonomous driving, which resulted in significant wall-clock speedups and improved detection accuracy.

Background

Conventional deep CNNs apply convolution operators uniformly at every spatial location across hundreds of layers, requiring trillions of operations per second. Our latest research builds on the observation that many of these operations are wasted on irrelevant parts of the input. In a typical scene, only a small percentage of the observed data is important; we refer to this phenomenon as sparsity. Biological vision exploits sparsity as well: the retina has lower receptor density and less color information in its peripheral portions, and movement detected in peripheral vision guides where the high-acuity foveal vision is focused.

In the context of artificial neural networks, CNNs that exploit activation sparsity have previously been explored on small-scale tasks such as handwriting recognition, but they have not yet yielded a practical speedup over highly optimized dense convolution implementations.

However, our research shows that a practical speedup of up to an order of magnitude can be achieved by leveraging what we refer to as block sparsity in CNN activations, as shown in Figure 1, below:

Introducing: SBNet

With these insights, we developed SBNet, an open source algorithm for TensorFlow that exploits sparsity in the activations of CNNs, thereby significantly speeding up inference.

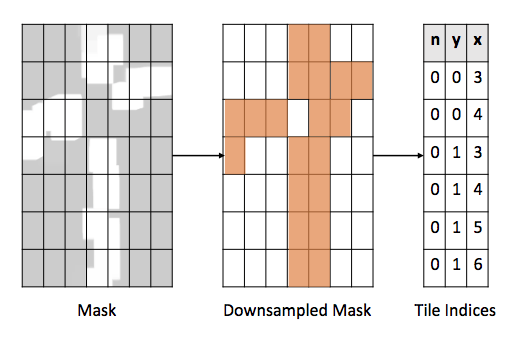

For the purposes of our algorithm, we define block sparsity using a mask that marks the locations where activations are non-zero. This mask can come from a priori knowledge of the problem or simply from thresholding averaged activations. To take advantage of highly optimized dense convolution operators, we define two operations: one that gathers the active regions of the sparse activations into a smaller, dense feature map, and one that scatters the results back into the full-size tensor.
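As a rough illustration of the thresholding option, the sketch below builds a binary mask from a single feature map. It averages activation magnitudes over channels and compares the result against a threshold. This is plain Python rather than SBNet code. The function names `threshold_mask` and `mask_sparsity`, the use of absolute values, and the threshold are our assumptions.

```python
def threshold_mask(activations, threshold):
    """Return a binary H x W mask from an H x W x C feature map (nested lists).

    Hypothetical helper: a location is marked active (1) when the mean
    absolute activation across its C channels exceeds `threshold`.
    """
    mask = []
    for row in activations:
        mask_row = []
        for channels in row:
            mean_abs = sum(abs(v) for v in channels) / len(channels)
            mask_row.append(1 if mean_abs > threshold else 0)
        mask.append(mask_row)
    return mask


def mask_sparsity(mask):
    """Fraction of inactive (zero) locations in the mask."""
    total = sum(len(row) for row in mask)
    return 1.0 - sum(sum(row) for row in mask) / total


if __name__ == "__main__":
    # A 2 x 2 feature map with 2 channels per location.
    features = [[[0.0, 0.0], [1.0, 3.0]],
                [[0.2, 0.0], [0.0, 0.0]]]
    m = threshold_mask(features, threshold=0.5)
    print(m)                 # [[0, 1], [0, 0]]
    print(mask_sparsity(m))  # 0.75
```

Lowering the threshold marks more locations as active. This trades sparsity, and therefore speed, for coverage.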

SBNet first pools the mask over overlapping blocks of the input tensor and converts the result into a list of active block indices. This list is passed to a block-gather operation, as displayed in Figure 2, below:

The gather operation extracts these blocks (tiles) and stacks them along the batch dimension into a new tensor. Existing optimized dense convolution implementations are then applied to this tensor. A custom scatter operation then performs the inverse, writing the results on top of the original dense input tensor. Figure 3, below, shows our proposed sparse convolution mechanism using sparse gather/scatter operations:

When we designed the sparse operation APIs for SBNet, we wanted them to be easy to integrate into popular CNN architectures, such as ResNet and Inception, as well as other customized CNN building blocks. To accomplish this, we released CUDA implementations and TensorFlow wrappers for the three basic operations that we introduced: reduce_mask, sparse_gather, and sparse_scatter. Using these low-level operations, it is possible to add block sparsity to different CNN architectures and configurations.
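To trace the data flow without a GPU, the sketch below composes plain-Python stand-ins for these three steps into a block-sparse 3×3 convolution on a single channel. It is not SBNet's API. The hypothetical functions `reduce_mask_sketch`, `gather_blocks`, and `scatter_tiles` only mirror the roles of reduce_mask, sparse_gather, and sparse_scatter. `pad_zeros`, `conv2d_valid`, and `sparse_conv3x3` are hypothetical helpers.

The sketch makes several assumptions:
- the mask is max-pooled;
- padding is zero "same" padding;
- each block is one output tile plus a one-pixel halo, which is where the overlap between neighboring blocks comes from;
- inactive tiles keep the input values, as they would in a residual branch.

```python
def pad_zeros(x, p):
    """Zero-pad a 2-D list by p cells on every side."""
    blank = [0.0] * (len(x[0]) + 2 * p)
    middle = [[0.0] * p + list(row) + [0.0] * p for row in x]
    return [blank[:] for _ in range(p)] + middle + [blank[:] for _ in range(p)]


def reduce_mask_sketch(mask, block, stride):
    """Max-pool the mask over overlapping blocks that fit entirely inside it.

    Returns the (block_row, block_col) indices of blocks with any active cell."""
    (bh, bw), (sh, sw) = block, stride
    indices = []
    for bi, y0 in enumerate(range(0, len(mask) - bh + 1, sh)):
        for bj, x0 in enumerate(range(0, len(mask[0]) - bw + 1, sw)):
            if max(v for row in mask[y0:y0 + bh] for v in row[x0:x0 + bw]) > 0:
                indices.append((bi, bj))
    return indices


def gather_blocks(x, indices, block, stride):
    """Cut out one block per active index; the list plays the batch axis."""
    (bh, bw), (sh, sw) = block, stride
    return [[row[bj * sw:bj * sw + bw] for row in x[bi * sh:bi * sh + bh]]
            for bi, bj in indices]


def conv2d_valid(x, k):
    """Dense 'valid' 2-D cross-correlation, i.e. a CNN-style convolution."""
    kh, kw = len(k), len(k[0])
    return [[sum(x[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
             for j in range(len(x[0]) - kw + 1)]
            for i in range(len(x) - kh + 1)]


def scatter_tiles(tiles, indices, base, stride):
    """Write each output tile over a copy of the dense base tensor."""
    out = [list(row) for row in base]
    sh, sw = stride
    for tile, (bi, bj) in zip(tiles, indices):
        for a, row in enumerate(tile):
            out[bi * sh + a][bj * sw:bj * sw + len(row)] = row
    return out


def sparse_conv3x3(x, k, mask, stride):
    """Block-sparse 3x3 'same' convolution: reduce mask -> gather -> conv -> scatter."""
    sh, sw = stride
    assert len(x) % sh == 0 and len(x[0]) % sw == 0
    block = (sh + 2, sw + 2)  # output tile plus a 1-pixel halo on each side
    indices = reduce_mask_sketch(pad_zeros(mask, 1), block, stride)
    blocks = gather_blocks(pad_zeros(x, 1), indices, block, stride)
    tiles = [conv2d_valid(b, k) for b in blocks]  # each tile is sh x sw
    return scatter_tiles(tiles, indices, x, stride), indices


if __name__ == "__main__":
    x = [[float(4 * i + j) for j in range(4)] for i in range(4)]
    kernel = [[1.0, 0.0, -1.0], [2.0, 0.0, -2.0], [1.0, 0.0, -1.0]]
    mask = [[0] * 4 for _ in range(4)]
    mask[0][3] = 1  # one active pixel in the top-right corner
    out, active = sparse_conv3x3(x, kernel, mask, stride=(2, 2))
    print(active)  # [(0, 1)]: only the top-right 2x2 output tile is computed
```

Within every active tile, the result is identical to a dense convolution. The savings come from skipping inactive tiles entirely. A GPU implementation would stack the gathered blocks into one tensor, so that a single call to an optimized dense convolution processes all of them at once.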

Below, we provide a TensorFlow sample demonstrating how to sparsify a single-layer convolution operation using SBNet primitives:

More examples, including a full implementation of ResNet blocks, can be found in our GitHub repo.

Next, we discuss how we apply SBNet to train 3D vehicle detection systems at Uber ATG.

Application: 3D vehicle detection from LiDAR points

At Uber ATG Toronto, we validate SBNet on the task of 3D vehicle detection from LiDAR points. This is a good test case because its inputs are sparse and its inference time constraints are demanding. In our setup, the LiDAR produces a 3D point cloud of the surrounding environment at a rate of 10 radial sweeps per second. For each sweep, we manually annotate 3D bounding boxes for all surrounding vehicles. In addition to the point cloud and 3D labels, we also have road layout information extracted from the map.

A bird’s eye view of the data, vehicle labels, and road map is shown in Figure 4, below:

We take a CNN-based approach to this task. We discretize the LiDAR point cloud from an overhead view at a resolution of 0.1 m per pixel, and the resulting representation exhibits over 95 percent sparsity. The data is then fed into a ResNet-based single-shot detector. (For more on our baseline detector, please refer to our research.)
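A minimal sketch of this kind of discretization is shown below. It also computes the fraction of empty cells, which is the sparsity measure quoted above. The sketch assumes a single binary occupancy channel and an arbitrary rectangular region of interest. The function names, ranges, and occupancy encoding are illustrative assumptions rather than the detector's actual input format.

```python
import math


def bev_occupancy(points, x_range, y_range, resolution=0.1):
    """Rasterize (x, y, z) points, in meters, into a binary overhead grid.

    Hypothetical helper: each cell covers `resolution` x `resolution` meters,
    points outside the region of interest are dropped, and z is ignored.
    """
    (x_min, x_max), (y_min, y_max) = x_range, y_range
    width = round((x_max - x_min) / resolution)
    height = round((y_max - y_min) / resolution)
    grid = [[0] * width for _ in range(height)]
    for x, y, _z in points:
        col = math.floor((x - x_min) / resolution)
        row = math.floor((y - y_min) / resolution)
        if 0 <= row < height and 0 <= col < width:
            grid[row][col] = 1
    return grid


def sparsity(grid):
    """Fraction of empty cells in the grid."""
    cells = sum(len(row) for row in grid)
    return 1.0 - sum(sum(row) for row in grid) / cells


if __name__ == "__main__":
    pts = [(0.05, 0.05, 1.0), (0.15, 0.05, 0.5), (0.16, 0.07, 0.2), (5.0, 5.0, 0.0)]
    grid = bev_occupancy(pts, x_range=(0.0, 1.0), y_range=(0.0, 1.0))
    print(len(grid), len(grid[0]))  # 10 10
    print(sparsity(grid))           # 2 of 100 cells occupied
```

At 0.1 m per pixel, points from a single object fall into many distinct cells, while open space produces none. This is why the overhead grid is mostly empty.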

We benchmark two SBNet variants against a baseline detector that uses conventional dense convolution. In each variant, all layers are replaced with their block-sparse counterparts. The variants differ in their source of sparsity information: one uses a precomputed road map (known in advance) and the other uses a predicted foreground mask. The road map can be extracted from an offline map, so it adds no computation time to the detector. The predicted foreground mask is generated by an additional low-resolution CNN and yields higher sparsity than the road map.

With SBNet, we measured significant speedups in both variants over the baseline detector. In Figure 5, below, we plot the measured speedup against the level of sparsity in the input data:

The road map variant averaged 80 percent sparsity with a corresponding speedup of more than 2x. The predicted mask variant averaged around 90 percent sparsity with a corresponding 3x speedup.
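A simple cost model helps put these numbers in context. Suppose compute scales with the fraction of active locations, 1 − s for sparsity s. The ideal speedup is then bounded as shown below. This model is our simplification. It ignores the overhead of mask pooling, the overlap between blocks, and the gather and scatter operations themselves.

```latex
S_{\text{ideal}}(s) = \frac{1}{1 - s}, \qquad
S_{\text{ideal}}(0.80) = \frac{1}{0.20} = 5, \qquad
S_{\text{ideal}}(0.90) = \frac{1}{0.10} = 10
```

The measured speedups fall below these bounds, which is consistent with the overheads the model leaves out. The bound mainly illustrates why the higher sparsity of the predicted mask translates into a larger gain.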

In terms of detection accuracy, retraining the detector with the SBNet architecture resulted in a 2-percentage-point gain in average precision. This suggests that leveraging data sparsity stabilizes model training by reducing noise and variance, leading to more accurate 3D vehicle detection in addition to faster inference time.

Next steps

We believe SBNet is broadly applicable across a variety of deep learning architectures, models, applications, and sources of sparsity, and we are excited to see the different ways the deep learning research community will utilize these architectural building blocks. For a more detailed explanation of SBNet and our research, we encourage you to read our white paper.


Uber is expanding its Advanced Technologies Group in Toronto, Canada, focusing on AI research and development around perception, prediction, motion planning, localization, and mapping. If developing novel computer vision and machine learning algorithms for autonomous driving interests you, consider applying for a role on our team!

Mengye Ren, Andrei Pokrovsky, and Bin Yang are researchers/engineers at Uber Advanced Technologies Group Toronto. Raquel Urtasun is the Head of Uber ATG Toronto, as well as an Associate Professor in the Department of Computer Science at the University of Toronto and a co-founder of the Vector Institute for AI.


Further readings

- M. Ren, A. Pokrovsky, B. Yang, R. Urtasun, “SBNet: Sparse Blocks Network for Fast Inference,” arXiv preprint arXiv:1801.02108, 2018.

- Y. LeCun, L. Bottou, Y. Bengio, P. Haffner, “Gradient-based learning applied to document recognition,” Proceedings of the IEEE, vol. 86, no. 11, pp. 2278–2324, 1998, doi:10.1109/5.726791.

- M. Abadi et al., “TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems,” arXiv preprint arXiv:1603.04467, 2016.

- B. Graham and L. van der Maaten, “Submanifold sparse convolutional networks,” arXiv preprint arXiv:1706.01307, 2017.

- K. He, X. Zhang, S. Ren, J. Sun, “Deep residual learning for image recognition,” in Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2016.

- A. Lavin and S. Gray, “Fast algorithms for convolutional neural networks,” in Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2016.

Mengye Ren

Mengye Ren is a research scientist at Uber ATG Toronto. He is also a PhD student in the machine learning group of the Department of Computer Science at the University of Toronto. He studied Engineering Science in his undergrad at the University of Toronto. His research interests are machine learning, neural networks, and computer vision. He is originally from Shanghai, China.

Bin Yang

Bin Yang is a research scientist at Uber ATG Toronto. He is also a PhD student at the University of Toronto, supervised by Prof. Raquel Urtasun. His research interests lie in computer vision and deep learning, with a focus on 3D perception in autonomous driving scenarios.

Raquel Urtasun

Raquel Urtasun is the Chief Scientist for Uber ATG and the Head of Uber ATG Toronto. She is also a Professor at the University of Toronto, a Canada Research Chair in Machine Learning and Computer Vision, and a co-founder of the Vector Institute for AI. Her awards include:
- an NSERC EWR Steacie Award;
- an NVIDIA Pioneers of AI Award;
- a Ministry of Education and Innovation Early Researcher Award;
- three Google Faculty Research Awards;
- an Amazon Faculty Research Award;
- a Connaught New Researcher Award;
- a Fallona Family Research Award;
- two Best Paper Runner-Up Prizes awarded at CVPR in 2013 and 2017.

She was also named Chatelaine's 2018 Woman of the Year and one of Toronto's top influencers of 2018 by Adweek magazine.

Posted by Mengye Ren, Andrei Pokrovsky, Bin Yang, Raquel Urtasun