How Trip Inferences and Machine Learning Optimize Delivery Times on Uber Eats

15 June 2018 / Global

In Uber’s ride-hailing business, a driver picks up a user from a curbside or other location, and then drops them off at their destination, completing a trip. Uber Eats, our food delivery service, faces a more complex trip model. When a user requests a food order in the app, the specified restaurant begins preparing the order. When that order is ready, we dispatch a delivery-partner to pick it up and bring it to the eater.

Modeling the real-world logistics that go into an Uber Eats trip is a complex problem. With incomplete information, small decisions can make or break the experience for our delivery-partners and eaters. One of the primary issues we try to optimize is when our business logic dispatches a delivery-partner to pick up an order. If the dispatch is too early, the delivery-partner waits while the food is being prepared. If the dispatch is too late, the food may not be as fresh as it could be, and it arrives late to the eater.

Due to the noisy nature of GPS, tracing the signal from delivery-partners’ phones proved inadequate for the task of determining the best dispatch time. However, leveraging motion sensor data from phones gives us a detailed picture of when a delivery-partner is on the road, walking, or stuck waiting. Using this data, we created our Uber Eats Trip State Model, letting us segment out each stage of a trip. Further, this model lets us collect and use historical data for individual restaurants so we can optimize delivery times for both our delivery-partners and eaters.

Improving time efficiency for delivery-partners and eaters

The decisions that our business logic makes on when to dispatch delivery-partners have a significant impact on the number of trips that a delivery-partner can complete, ultimately affecting their earning potential. The GPS signal from delivery-partners’ phones serves as a primary signal to help us understand what is happening on a trip. However, GPS data is noisy in urban environments and not sufficient to understand precise interactions between delivery-partners and restaurants. In order to obtain concrete information on the state of a delivery, location data and motion data need to work in tandem.

With this traditional, GPS-only approach, as shown in Figure 1, we are able to see when a delivery-partner:

- has been dispatched

- has arrived at the restaurant

- is en route to the drop-off location

- has arrived at the drop-off location and completed the trip

However, it is often unclear from GPS data alone why the delivery-partner spends so much time in or around the restaurant before they pick up the eater’s order. Given its noisiness, GPS data is too unreliable for our needs. To deliver a truly magical experience, we need to know if the delivery-partner spent this time looking for parking or waiting for the food to be prepared.

We listened to feedback from delivery-partners about pain points they have experienced because of these types of inefficiencies. For example:

- “There are a few flaws in the process of pick[up] and delivery. The pickup is sometimes not ready at all… sometimes even 20 minutes.”

- “I like [how] everything is streamlined, compared to other platforms, when you have to wait a lot of time [it] takes away from other trips.”

As a result of this feedback, we set out to develop a solution to address delivery-partner concerns. In particular, we looked at:

- Which restaurants have the longest parking times and why?

- How long does it take for the delivery-partner to walk to the restaurant?

- Does a restaurant have a difficult pickup process for delivery-partners?

- When should we dispatch the delivery-partner? Does the delivery-partner have to wait for the food? Is the food waiting for the delivery-partner?

- Did the delivery-partner have trouble with a delivery?

Sensor processing

As we highlighted above, GPS can be extremely noisy and inaccurate, making it insufficient as the only basis for our Trip State Model. However, we can enrich our sensor inputs with additional data sources, namely motion data and activity recognition (AR). Motion data comes primarily from accelerometers and gyroscopes in phones. The developer-friendly Android activity recognition API provides eight activity labels, shown below in Figure 2:

With this sensor landscape in mind, our goal was to build a reliable sensor collection and machine learning architecture to feed our Trip State Model for Uber Eats.

Figure 3, below, visualizes the data flow starting from raw data collection on the phone to processing it as part of a batch pipeline. The inference steps use machine learning (ML) models aimed at improving the product experience for the delivery-partner and eater by accurately calculating delivery times.

Mobile collection

In addition to GPS and motion data, we use the ActivityRecognitionClient API on the Android operating system to collect activity recognition data. Each activity received from the API comes with a confidence score between 0 and 100 that represents the system’s confidence that the activity is occurring. Android obtains its activity predictions through a combination of inertial measurement unit motion data and the phone’s step detection sensors. Uber only collects this data when delivery-partners are online, either on an Uber Eats delivery trip or giving a ride.
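To illustrate how confidence scores like these might be used downstream, the sketch below keeps only the most confident activity per timestamp and discards weak readings. It is a minimal illustration, not Uber's pipeline: the `ActivitySample` record, the `most_confident` helper, and the threshold of 50 are all hypothetical. The activity names follow Android's activity labels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActivitySample:
    """Hypothetical record for one activity reading collected on the phone."""
    timestamp: float   # seconds since the start of the trip
    activity: str      # e.g. "WALKING", "IN_VEHICLE", "STILL"
    confidence: int    # 0-100, as reported by the platform


def most_confident(samples, min_confidence=50):
    """Keep, per timestamp, the highest-confidence activity at or above the threshold."""
    best = {}
    for sample in samples:
        if sample.confidence < min_confidence:
            continue  # too uncertain to be useful (threshold is an assumption)
        current = best.get(sample.timestamp)
        if current is None or sample.confidence > current.confidence:
            best[sample.timestamp] = sample
    return [best[t] for t in sorted(best)]
```

Timestamps whose readings all fall below the threshold are dropped entirely, which leaves gaps that a later sequence model would need to tolerate.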

Machine learning

To feed this consolidated trip-level data into the Trip State Model, we assembled a dataset of labeled data, as follows:

(restaurant1, driver1, timestamp1, parked)

(restaurant1, driver1, timestamp2, waiting_at_restaurant)

(restaurant1, driver1, timestamp3, walking_to_car)

(restaurant1, driver1, timestamp4, enroute_to_eater)
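One way to hold rows of this shape in code is sketched below. The field names and the `group_into_trips` helper are assumptions made for illustration; the article only specifies the four-element tuple layout.

```python
from collections import defaultdict
from typing import NamedTuple


class LabeledObservation(NamedTuple):
    """One labeled row: (restaurant, driver, timestamp, state). Field names are hypothetical."""
    restaurant_id: str
    driver_id: str
    timestamp: int
    state: str


def group_into_trips(rows):
    """Group rows by (restaurant, driver) and return each group's states in time order."""
    trips = defaultdict(list)
    for row in rows:
        trips[(row.restaurant_id, row.driver_id)].append(row)
    return {
        key: [r.state for r in sorted(observations, key=lambda r: r.timestamp)]
        for key, observations in trips.items()
    }
```

Grouping by restaurant and driver yields one ordered label sequence per pickup, which is the form a sequence model is trained on.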

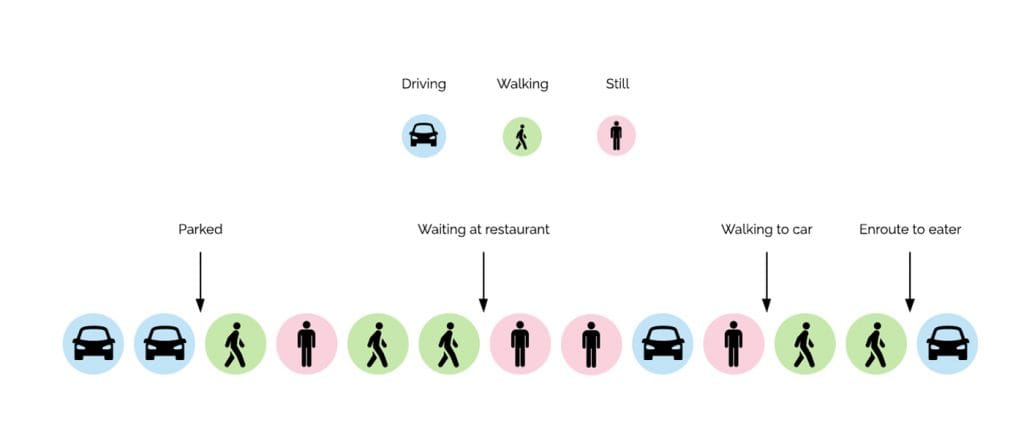

As Figure 4 shows, our sequence models are capable of finding the delivery-partner’s change points within a sequence of state observations. These observations consist of activities or activities fused with other modalities, such as GPS and motion sensors.

Finding these change points is challenging for the following reasons:

- Noisy observations: Even though Android-based activities report their confidences, the Android-based classifier is noisy and the separation boundaries between change points are not very clean (shown in Figure 4).

- Ground truth data: In order to train high-performing sequence models, we need sufficient ground truth data from restaurants. However, restaurants show very different delivery-partner patterns and behaviors. We cannot obtain the admittedly sparse data for each restaurant in a scalable fashion.
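The effect of noisy observations can be seen with a naive baseline. The sketch below is not the sequence model described in this article. It is a simple sliding-window majority vote followed by change-point extraction, with hypothetical function names, shown only to make the problem concrete.

```python
from collections import Counter


def change_points(labels):
    """Return (index, new_label) wherever the label differs from the previous one."""
    return [(i, labels[i]) for i in range(1, len(labels)) if labels[i] != labels[i - 1]]


def smooth(labels, half_width=1):
    """Replace each label with the most common label in a window centered on it."""
    smoothed = []
    for i in range(len(labels)):
        window = labels[max(0, i - half_width): i + half_width + 1]
        smoothed.append(Counter(window).most_common(1)[0][0])
    return smoothed


raw = ["IN_VEHICLE", "IN_VEHICLE", "WALKING", "IN_VEHICLE", "IN_VEHICLE",
       "WALKING", "WALKING", "STILL", "WALKING", "WALKING"]

print(len(change_points(raw)))     # the raw sequence flips label several times
print(change_points(smooth(raw)))  # smoothing leaves a single vehicle-to-walking change
```

A fixed window like this blurs short but genuine states and ignores which transitions are plausible, which is part of the motivation for a proper sequence model.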

Conditional random fields

In our case, a Trip State Model is a sequential model capable of inferring a sequence of discrete states from a sequence of noisy observations. These inferred discrete states are temporally related, i.e., we can impose an order on the states, and only a finite set of state transitions is possible. For example, a delivery-partner can wait at the restaurant only after they have parked the vehicle in the parking lot. Additionally, the current state plays an important role in predicting the next state. For example, if the delivery-partner is currently walking, they will very likely keep walking in the next timestamp.
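The ordering constraint can be written down as a set of allowed transitions. The sketch below assumes a strictly forward-moving order with self-transitions, which is a simplification chosen for illustration. The article does not list the exact transition set, and the names here are hypothetical.

```python
# Assumed forward-only ordering of pickup states (illustrative, not from the article).
ORDER = [
    "arrived_at_restaurant",
    "parked",
    "waiting_at_restaurant",
    "walking_to_car",
    "enroute_to_eater",
]

# A state may persist (self-transition) or advance to the next state in the order.
ALLOWED = {(s, s) for s in ORDER} | set(zip(ORDER, ORDER[1:]))


def first_invalid_transition(states):
    """Return the index of the first disallowed transition, or None if the sequence is valid."""
    for i in range(1, len(states)):
        if (states[i - 1], states[i]) not in ALLOWED:
            return i
    return None
```

For instance, a sequence that jumps from `arrived_at_restaurant` straight to `waiting_at_restaurant` is flagged at index 1, because waiting is only reachable after parking under this assumed order.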

We use a conditional random field (CRF), a discriminative, undirected probabilistic graphical model, to approximate such functions. We have enhanced conditional random fields with kernels to capture non-linear relationships in the data. Modeling the problem as a CRF allows the flexibility to integrate signals from multiple sources, including activities from the device and proximity to restaurants. Other viable approaches are hidden Markov models and recurrent neural networks.
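Once a linear-chain model of this kind has per-step observation scores and pairwise transition scores, the most likely state sequence is typically recovered with Viterbi decoding. The sketch below shows only that decoding step. The scores are hand-picked for illustration, it does not cover training or the kernel extension mentioned above, and the function and variable names are hypothetical.

```python
import math


def viterbi(emission_scores, transition_scores):
    """Decode the highest-scoring state path.

    emission_scores: list of {state: score} dicts, one per time step.
    transition_scores: {(prev_state, next_state): score}; missing pairs are forbidden.
    """
    states = list(emission_scores[0])
    best = {s: emission_scores[0][s] for s in states}
    backpointers = []
    for scores in emission_scores[1:]:
        new_best, pointers = {}, {}
        for s in states:
            # Best predecessor for state s, scoring forbidden transitions as -inf.
            prev, total = max(
                ((p, best[p] + transition_scores.get((p, s), -math.inf)) for p in states),
                key=lambda item: item[1],
            )
            new_best[s] = total + scores[s]
            pointers[s] = prev
        best = new_best
        backpointers.append(pointers)
    # Trace back from the best final state.
    path = [max(best, key=best.get)]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    return path[::-1]


# Illustrative scores: staying in a state is rewarded, only forward moves are allowed.
TRANSITIONS = {
    ("parked", "parked"): 1.0,
    ("parked", "waiting_at_restaurant"): 0.0,
    ("waiting_at_restaurant", "waiting_at_restaurant"): 1.0,
    ("waiting_at_restaurant", "walking_to_car"): 0.0,
    ("walking_to_car", "walking_to_car"): 1.0,
}
```

Because the transition scores reward staying in the current state and forbid backward moves, a single noisy observation that briefly favors a different state tends to be overridden by its neighbors.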

Trip State Model

Aggregating GPS data and using sensor data from Android phones let us create a detailed Trip State Model for Uber Eats trips. This model gives us an in-depth look at how an Uber Eats trip proceeds, and gives us the opportunity to fine-tune the parameters we control, such as dispatch time, to ensure the best experience for not only our delivery-partners, but also our restaurant-partners and eaters. Figure 5, below, shows the additional detail achieved in our Uber Eats Trip State Model.

By aggregating time series sensor data, the model infers the likelihood that the delivery-partner is in one of the following states for each point in time (i.e. trip inferences):

- Arrived at restaurant: the delivery-partner has arrived at the restaurant and may be circling the block to find a parking spot. The total duration of this state can be used to adjust dispatch or power preferential dispatch, e.g. dispatching bikes to restaurants with long parking times.

- Parked: the delivery-partner has parked and is now walking and locating the pickup spot at the restaurant. During this state, the GPS location of the phone can help us ensure the accuracy of our location data for the restaurant.

- Waiting at restaurant: the delivery-partner has found the designated pick-up spot and is waiting for the food to be prepared. Analyzing the duration of this state helps improve dispatch algorithms and estimated time of delivery (ETD).

- Walking to car: the delivery-partner has picked up the food and is heading back to their vehicle.

- En route to eater: the delivery-partner is back in their vehicle, en route to delivering the food to the eater.
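Given a sequence of inferred states with timestamps, the duration of each state follows directly. The sketch below assumes a hypothetical `timeline` of `(timestamp_seconds, state)` entries marking when each state begins. It illustrates the bookkeeping only, not the production pipeline.

```python
def state_durations(timeline, trip_end):
    """Sum the seconds spent in each state.

    timeline: list of (timestamp_seconds, state), sorted by time; each state
    lasts until the next entry begins, and the last one lasts until trip_end.
    """
    durations = {}
    boundaries = timeline[1:] + [(trip_end, None)]
    for (start, state), (end, _) in zip(timeline, boundaries):
        durations[state] = durations.get(state, 0) + (end - start)
    return durations


# Made-up example trip: timestamps in seconds from arrival near the restaurant.
example = [
    (0, "arrived_at_restaurant"),
    (120, "parked"),
    (180, "waiting_at_restaurant"),
    (480, "walking_to_car"),
    (540, "enroute_to_eater"),
]
print(state_durations(example, trip_end=1140))
```

Aggregating these per-state durations across many trips to the same restaurant gives the kind of historical parking and waiting profile that can inform dispatch.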

With this model, we can better understand what’s happening in the real world, optimize dispatch to improve food freshness, and work with restaurants to reduce delivery-partner waiting and parking time. Overall, this move from heuristics to models allows us to greatly improve operational efficiency.

Minimizing wait times

When we dispatch a delivery-partner to a restaurant, we can use the detailed historic breakdown of trip states (as shown in Figure 6) to ensure they arrive just when the food is ready. This dispatch method minimizes the wait time for the delivery-partner at the restaurant and, ultimately, helps them complete more trips. For the eater, we can offer better ETDs and ensure that the food is delivered as soon as possible after it is prepared—resulting in a fresher meal and shorter wait time.
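As a rough worked example of the timing involved, the sketch below delays dispatch so that the delivery-partner's expected arrival at the pickup spot coincides with the expected food-ready time. The formula, the use of medians, and every parameter name are assumptions made for illustration. The article does not describe the actual dispatch logic.

```python
from statistics import median


def dispatch_delay_seconds(prep_history, travel_estimate, parking_history, walk_in_history):
    """Seconds to wait after the order is placed before dispatching (hypothetical rule).

    All inputs are in seconds. Historical lists are per-restaurant samples of
    food preparation, parking, and walk-in times taken from past trips.
    """
    expected_prep = median(prep_history)
    # Time the delivery-partner needs to reach the pickup spot once dispatched.
    lead_time = travel_estimate + median(parking_history) + median(walk_in_history)
    # Never dispatch "in the past": if the lead time exceeds prep time, dispatch now.
    return max(0, expected_prep - lead_time)


# Made-up numbers: 12 min typical prep, 5 min drive, 90 s parking, 45 s walk-in.
print(dispatch_delay_seconds([600, 900, 720], 300, [60, 120, 90], [30, 45, 60]))  # 285
```

With these made-up numbers, the expected lead time is 435 seconds against 720 seconds of preparation, so dispatch would be held back by 285 seconds.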

We are also learning more about the parking time for each restaurant, which will allow us to further improve dispatch efficiency. This system optimizes pickups based on time of day, traffic, and the restaurant. At the scale of our Uber Eats business, these improvements can have a profound impact on the delivery-partner trip experience.

Next steps

Efficient data processing encompasses scalability, architecture robustness, and reliability, as well as modeling and machine learning challenges. The approaches discussed above showcase early steps of moving from heuristics to models as a company.

In the future, we’d like to extend our existing algorithm and build a real-time implementation of our Trip State Model. This system can unlock new interfaces that dynamically guide delivery-partners based on the task at hand. For example, when a delivery-partner is looking for parking, we can provide helpful guidance on where to park.

Refining the individual states of the Uber Eats trip model will significantly improve the experience for our restaurants, delivery-partners, and eaters through faster and more seamless food deliveries.

If you’re interested in machine learning and signal processing, distributed systems, or building delightful products, take a look at Uber’s job openings in the Sensing, Inference & Research team.


Ryan Waliany

Ryan Waliany is a Product Manager on Uber’s Sensing, Inference & Research (SIR) team.

Lei Kang

Lei Kang is a Data Scientist on the Uber Eats Marketplace Prediction team.

Ernel Murati

Ernel Murati is a Mobile Engineer on Uber’s Sensing, Inference & Research (SIR) team.

Mohammad Shafkat Amin

Mohammad Shafkat Amin is a Software Engineer on Uber’s Sensing, Inference & Research (SIR) team.

Posted by Ryan Waliany, Lei Kang, Ernel Murati, Mohammad Shafkat Amin, Nikolaus Volk