One Stone, Three Birds: Finer-Grained Encryption @ Apache Parquet™

10 March 2022 / Global

Overview

Data access restrictions, retention, and encryption at rest are fundamental security controls. This blog explains how we have built and utilized open-sourced Apache Parquet™’s finer-grained encryption feature to support all 3 controls in a unified way. In particular, we will focus on the technical challenges of designing and applying encryption in a secure, reliable, and efficient manner. We will also share our experiences with recommended practices to manage the system in production and at scale.

Standard Security Controls

This section describes the detailed requirements of standard security controls (i.e., access restriction, retention, and encryption-at-rest) to provide context for the succeeding technical details.

- Finer-grained access control: We can apply data access control at different levels: DB/table, column, row, and cell. The most general approach is table-level control, which specifies whether someone has access to the whole table or to none of it. However, in practice, there may be only a handful of columns in a table that need to be access restricted based on your data classification specifications; the rest are okay for everyone to use. Even among the columns needing access control, different levels of access limitation may be required. Applying a coarse-grained access restriction such as table-level control would either preclude many legitimate use cases or tempt owners to relax the rules. The former is unproductive and the latter is risky. Column-level access control (CLAC) addresses this issue by allowing access control at a finer grain (column level). We strive to provide column-level access control that covers both top-level and nested columns.

- Tag-driven access policy: The category/tag of a column, rather than the column’s name, should decide who can access which columns. In practice, data owners assign a predefined tag to a column, which triggers a predefined set of access policies.

- Finer-grained retention: A general retention policy may require the deletion of certain categories of data after X days. It does not necessarily require that the whole table or a partition be removed after X days. In this effort, we address this issue through column-specific deletion, based on the tag, after X days. In other words, we delete only what the policy requires while keeping other data available for consumption.

- Encryption at rest: Data encryption is a well-established security control. This project attempts to encrypt only certain data fields instead of encrypting all data elements.
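As a minimal sketch of the tag-driven idea in the list above, the snippet below keys access and retention decisions on a column's tag rather than its name. The tag names, group names, retention periods, and column names are all hypothetical and chosen only for illustration; they are not the tags or policies used in production.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TagPolicy:
    allowed_groups: frozenset      # groups that may read columns with this tag
    retention_days: Optional[int]  # None means no column-specific retention


# Hypothetical predefined tags and the policies they trigger.
TAG_POLICIES = {
    "pii_high": TagPolicy(frozenset({"privacy-approved"}), 90),
    "pii_low": TagPolicy(frozenset({"privacy-approved", "analytics"}), 365),
}

# Hypothetical tags assigned by data owners; untagged columns are open to all.
COLUMN_TAGS = {
    "trips.rider_email": "pii_high",
    "trips.pickup_zone": "pii_low",
}


def can_read(column, user_groups):
    """Decide access from the column's tag, never from its name."""
    tag = COLUMN_TAGS.get(column)
    if tag is None:
        return True
    return bool(TAG_POLICIES[tag].allowed_groups & set(user_groups))


def retention_days(column):
    """Return the tag-driven retention period, or None for untagged columns."""
    tag = COLUMN_TAGS.get(column)
    return None if tag is None else TAG_POLICIES[tag].retention_days
```

Because policies hang off tags, reclassifying a column only means changing its entry in the tag map; the policy definitions stay untouched.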

Challenges

It is not a common practice to apply encryption to achieve access control, retention, and encryption at rest at the same time. We are striving to adopt this novel and unified approach to realize these critical security controls. However, we have to resolve the following real challenges before making it a reality:

Multiple access routes: As an open platform, Apache Hadoop®-based big data supports different data access routes, formats, and APIs. The access routes include SQL (Apache Hive®, Presto®), programmatic (Apache Spark™, Apache Flink®), and direct (CLI, REST). Although we decided to enforce at the lowest possible layer (i.e., the Parquet library), different projects might use different Parquet versions. Some systems (e.g., Presto) even utilize a custom Parquet library that may not align fully with the open-source version. Additionally, while most accesses depend on Hive metastore (HMS), a few don’t go through HMS, making it harder to apply some policies consistently.

Performance: While core encryption and decryption libraries have become very fast due to recent hardware-level acceleration (e.g., Intel® AES-NI instructions), the question of read-write overhead still has some relevance. The performance penalty could come from key accesses and from encryption decisions such as block vs. single value, 256-bit vs. 128-bit keys, etc. At Uber’s scale, where a user query could potentially scan billions of records, even a tiny amount of overhead could halt the execution.

Handling access denied (hard vs. soft): In a situation where, for example, a user lacks access to only one column, how should the system behave at the Parquet level? The ideal solution is to throw an exception and fail the query. However, in reality, the user might be okay with getting a masked value (e.g., null) as the column value, since she does not care about the sensitive column. At the same time, most queries run with the wildcard (“SELECT * ..”) as the projection. In this case, explicitly selecting a long list of columns (skipping only one sensitive column) is time-consuming and inconvenient for the user. More importantly, many queries have been running regularly through pipelines for years with no active developers available. A hard failure would instantly cause thousands of pipelines to fail, creating an operational nightmare with immediate business impact. On the other hand, silently returning a masked value could lead to an unintended business decision. As part of this initiative, we need to address these conflicting scenarios acceptably.
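The two behaviors described above can be sketched as a single switch. This is only an illustration of the hard vs. soft trade-off, not the Parquet library's actual mechanism; the function name, the `on_denied` parameter, and the exception class are hypothetical.

```python
class ColumnAccessDenied(Exception):
    """Raised in 'hard' mode when a row contains a column the user cannot read."""


def read_row(row, readable_columns, on_denied="hard"):
    """Return the row, failing or null-masking columns the user cannot read."""
    result = {}
    for name, value in row.items():
        if name in readable_columns:
            result[name] = value
        elif on_denied == "soft":
            result[name] = None  # null masking keeps wildcard queries running
        else:
            raise ColumnAccessDenied(f"no access to column {name!r}")
    return result


row = {"trip_id": 7, "rider_email": "a@example.com"}
masked = read_row(row, {"trip_id"}, on_denied="soft")
# masked keeps trip_id and replaces rider_email with None
```

Soft mode keeps long-running `SELECT *` pipelines alive, while hard mode makes the denial impossible to overlook; which default is right depends on how costly a silently masked value is for the consumer.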

Reliability: Since this process will modify hundreds of PB of old and new data, the possibility of data loss is high. For instance, if we lose the key, all associated encrypted data will be undecipherable. Moreover, Parquet encryption will be in the critical path of all data accesses; a simple bug could cause business-hindering outages. In particular, key management through KMS (Key Management Service) introduces challenges to maintaining the reliability of this critical and core service.

Historical data: Often, a sizable amount of historical data is stored in the production system. The safe rewrite of historical data demands a careful orchestration and execution of the plan with substantial computing resources. This problem gets multiplied due to frequent updates of column tags. Any modifications to the tagging system would necessitate the expansive backfills of PBs of data.

Multiple data formats: The recommended solution relies on the Parquet data format. However, it is difficult to enforce a single data format in an open platform like Hadoop, where users can choose any data format. As a result, we have a few data formats in production at Uber, making it harder to mandate convergence on Parquet. Translating other data formats into Parquet is a considerable effort with potential service interruptions involving multiple parties.

Schema tagging and adjustment: CLAC is based on column tags. We need a proper metadata tagging system for managing tags and propagating them to the Parquet level. More importantly, tags are not static. For example, a column might be initially tagged as one type of data. Then a few months later, it may be reclassified and labeled differently. The CLAC system will need to backfill the complete dataset (sometimes PBs in size) to comply with the latest tagging within a reasonable time.

Apache Parquet™ Modular Encryption

Parquet column encryption, also called modular encryption, was introduced to Apache Parquet™ and released in Parquet™ 1.12.0 by Gidon Gershinsky. A Parquet™ file can be protected by the modular encryption mechanism that encrypts and authenticates the file data and metadata, while allowing for regular Parquet functionality (columnar projection, predicate pushdown, encoding, and compression). The mechanism also enables column access control, via support for encryption of different columns with different keys.

Currently, Parquet™ supports 2 encryption algorithms: AES-GCM and AES-CTR. AES-GCM is an authenticated encryption algorithm that can prevent unauthenticated writing. Besides the data confidentiality (encryption), it supports 2 levels of integrity verification/authentication: of the data (default), and of the data combined with an optional additional authenticated data (AAD), which is a free text to be signed, together with the data. However, the AAD is required to be stored separately, in a KV store as an example, from the file itself, while the AAD metadata/index is saved in the Parquet™ file itself. The decryption application reads the AAD metadata/index first from the Parquet™ file and then reads AAD from the KV store before it can decrypt the Parquet™-encrypted data. More details about AAD can be found in RFC Using AES-CCM and AES-GCM Authenticated Encryption.

AES-CTR doesn’t perform integrity verification/authentication. As a result, it is faster than AES-GCM. The benchmarking result shows AES-CTR is 3 times faster than AES-GCM in single-thread applications in Java 9, or 4.5 times faster than AES-GCM in single-thread applications in Java 8.

A Unified Approach

Apache Parquet™ finer-grained encryption can encrypt data in different modules, as discussed above, including columns in files, and each column can be encrypted independently (i.e., using a different key). Each key grants access permission to different people or groups, so finer-grained access control at the column level can be achieved by controlling the permissions on each key. When the Parquet reader parses the file footer, the crypto metadata defined in the format tells the Parquet library which key to fetch before reading the data. If the user doesn’t have permission on the key, an ‘access denied’ exception is raised and the user’s query fails. In some cases, the user can instead receive a masked value such as ‘null’. In other words, users cannot read data without having permission on the key.

Data retention, such as deleting certain categories of data after X days, can be realized by having retention policies on the keys. When a key is deleted, the data encrypted by that key becomes garbage. This approach can avoid operating on column data directly, which is usually a cumbersome operation.
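To make the key-centric model concrete, here is a small sketch in which one in-memory key store serves both purposes: its per-key permissions act as column access control, and deleting a key acts as retention. Everything here is an assumption for illustration: `ToyKms` is a hypothetical stand-in for a real KMS, and `toy_xor_cipher` is a deliberately simple SHA-256 keystream that is NOT secure and only stands in for the AES modes Parquet actually uses.

```python
import hashlib
import os


def toy_xor_cipher(key: bytes, data: bytes) -> bytes:
    """Toy XOR stream cipher (NOT secure); applying it twice restores the input."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


class ToyKms:
    """Hypothetical in-memory key store with a per-key access list."""

    def __init__(self):
        self._keys = {}
        self._acl = {}

    def create_key(self, key_id, allowed_groups):
        self._keys[key_id] = os.urandom(32)
        self._acl[key_id] = set(allowed_groups)

    def get_key(self, key_id, user_groups):
        if key_id not in self._keys:
            raise KeyError(f"key {key_id!r} was deleted or never existed")
        if not self._acl[key_id] & set(user_groups):
            raise PermissionError(f"access denied for key {key_id!r}")
        return self._keys[key_id]

    def delete_key(self, key_id):
        # Retention: once the key is gone, the ciphertext can no longer be read.
        del self._keys[key_id]
        del self._acl[key_id]


kms = ToyKms()
kms.create_key("k_email", {"privacy-approved"})
key = kms.get_key("k_email", {"privacy-approved"})
ciphertext = toy_xor_cipher(key, b"a@example.com")
```

After `kms.delete_key("k_email")`, no caller can obtain the key again, so the stored ciphertext stays in place but is effectively deleted without rewriting any column data.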

System Architecture

The encryption system includes 3 layers: metadata & tagging, data & encryption, and key & policy. Their interactions, the flow of data, and the encryption controlling path are illustrated in the system architecture in Figure 1:

Entity Interactions and Data Flows

In the upper layer (metadata & tagging) are the ingestion and ETL (Extract, Transform, and Load) metastores. They are used by ingestion pipeline jobs and ETL transformation jobs, respectively. The metadata defines the name, type, nullability, and description of each dataset (table) at the field (column) level. The metadata tagging entity adds the field privacy property, which indicates whether or not that field will be encrypted and, if so, which key will be used. The metadata is put together into a metastore. We use an ingestion metastore with Apache Avro™’s schema format for ingestion pipelines, and Hive metastore for the ETL jobs.

The middle layer shows how data is ingested from transactional upstream business storage (e.g., RDBMS DBs, key-value DBs via the Kafka messaging system) and stored in the file storage system in the Apache Parquet™ format. The data is encrypted at the finer granularity indicated by the tagging in the upper layer. The encryption is performed inside the ingestion pipeline jobs and the ETL jobs, so the data is already encrypted when it is sent over the network (in transit) and when it lands in storage (at rest). This is more advantageous than storage-only encryption. The ETL jobs transform the ingested data into tables by flattening the columns or remodeling the tables. The transformed tables will also be encrypted if the source table is encrypted.

The bottom layer is the KMS and its associated policies. The keys are stored in the key store of the KMS, and the associated policy determines which group of people can access a column key to decrypt the data. Column access control is implemented in the policies of the keys. The privacy retention and deletion rules are likewise implemented via key retention and deletion.

The flow by which metadata tagging controls finer-grained encryption is as follows:

- A dataset is tagged at field level to indicate if that field will be encrypted or not, and which key is to be used if encrypted. The tagging information is stored in the ingestion metastore.

- The ingestion metastore has all the metadata, including the tagging information, that is needed by the ingestion pipeline jobs. When a job ingests a dataset from upstream, the relevant metadata is pulled from the ingestion metastore into the job.

- The dataset is written to the file storage system. If the metadata tagging indicates encryption is needed, the ingestion job will encrypt the data before sending it to the file storage system.

- The metadata for the ingested dataset is also forwarded to ETL metastore, which is used by ETL jobs and the queries. They need that metadata information when reading that dataset.

- The ETL metadata is pulled when an ETL job transforms the data into a new dataset (table). Again, the tagging information is used to control the encryption as discussed above.

- The transformed data is written back to file storage.
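The ingestion half of the flow above can be sketched as follows. This is a simplified illustration under stated assumptions: the metastores are plain dictionaries, the `key_id` field property is a hypothetical encoding of the tag, and `toy_encrypt` is a placeholder marker rather than real encryption (a real job would delegate that step to the Parquet writer).

```python
def toy_encrypt(key_id, value):
    # Placeholder marker, NOT real encryption.
    return f"<encrypted with {key_id}>"


def run_ingestion(dataset, records, ingestion_metastore, etl_metastore):
    # Pull the dataset's metadata, including tags, from the ingestion metastore.
    fields = ingestion_metastore[dataset]
    written = []
    for record in records:
        row = {}
        for name, value in record.items():
            key_id = fields.get(name, {}).get("key_id")
            # Encrypt only the fields whose tag names a key.
            row[name] = toy_encrypt(key_id, value) if key_id else value
        written.append(row)
    # Forward the same metadata so ETL jobs and queries see the tags too.
    etl_metastore[dataset] = fields
    return written


ingestion_metastore = {
    "trips": {"trip_id": {}, "rider_email": {"key_id": "k_email"}},
}
etl_metastore = {}
stored = run_ingestion(
    "trips",
    [{"trip_id": 7, "rider_email": "a@example.com"}],
    ingestion_metastore,
    etl_metastore,
)
```

An ETL job would repeat the same pattern, reading its tags from the ETL metastore instead, which is how encryption carries over from a source table to the tables derived from it.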

Schema-Driven Encryption

To activate encryption in Parquet™, one would need to modify each Parquet™ application (e.g., Spark, Hive, Presto, Hudi, and many others) with changes such as analyzing crypto properties, handling the KMS interaction, building the encryption properties parameter, handling error cases, and adding several other helper methods, and then call the Parquet™ API with a newly added parameter: FileEncryptionProperties. To avoid these changes to Parquet™ applications, PARQUET-1817 defined a factory interface, so that a plugin can be implemented to wrap the details above. This plugin can be delivered as a library so that it can be included in different applications just by adding it to the classpath. This way, we avoid making changes to each individual application’s code. In the following sections, we refer to this plugin interchangeably as the crypto properties & key retriever or the crypto retriever.

Now the question is how the crypto retriever knows which column is to be encrypted by which key. That information is stored in the tagging storage system. The easiest way is to let the plugin call the tagging storage directly to get the current dataset’s tagging information. The problem is that doing so adds the tagging storage as a dependency of Parquet™ applications like Spark, Hive, and Presto, which usually run on large compute clusters like YARN, Peloton, or Presto clusters. Sometimes even a single query job could send millions of RPC requests in a short period of time. This would create a scalability challenge for the tagging storage system and add reliability issues to the Parquet™ applications.

We believe it can be done better by eliminating those massive RPC calls. The idea is to propagate the tagging information to the schema storage (e.g., HMS) in an offline stage. The Parquet™ applications usually already depend on the schema storage, so no extra RPC calls are needed. Once the schema has the tagging information, the Parquet™ library running in the application can parse it and build the FileEncryptionProperties that Parquet™ needs in order to know which columns should be encrypted with which keys, along with several other pieces of information.
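A rough sketch of that parsing step is shown below. It assumes the tag has already been propagated into each schema field as a hypothetical `encrypt_key_id` metadata entry, and it returns a plain dictionary as a stand-in for Parquet's `FileEncryptionProperties`; the real object is built through the Parquet Java API and carries more information than this.

```python
def build_encryption_properties(schema_fields):
    """Derive a column -> key id mapping purely from the schema, with no RPC."""
    column_keys = {}
    for field in schema_fields:
        key_id = field.get("metadata", {}).get("encrypt_key_id")
        if key_id:
            column_keys[field["name"]] = key_id
    # No tagged columns means the file is written without encryption.
    return {"encrypted_columns": column_keys} if column_keys else None


# A two-column schema where only c2 carries an encryption tag.
schema = [
    {"name": "c1", "type": "int64"},
    {"name": "c2", "type": "string", "metadata": {"encrypt_key_id": "k_c2"}},
]
properties = build_encryption_properties(schema)
```

Since the writer already holds the schema, this lookup is local to the job, which is the point of the design: the tagging storage never sees per-file traffic.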

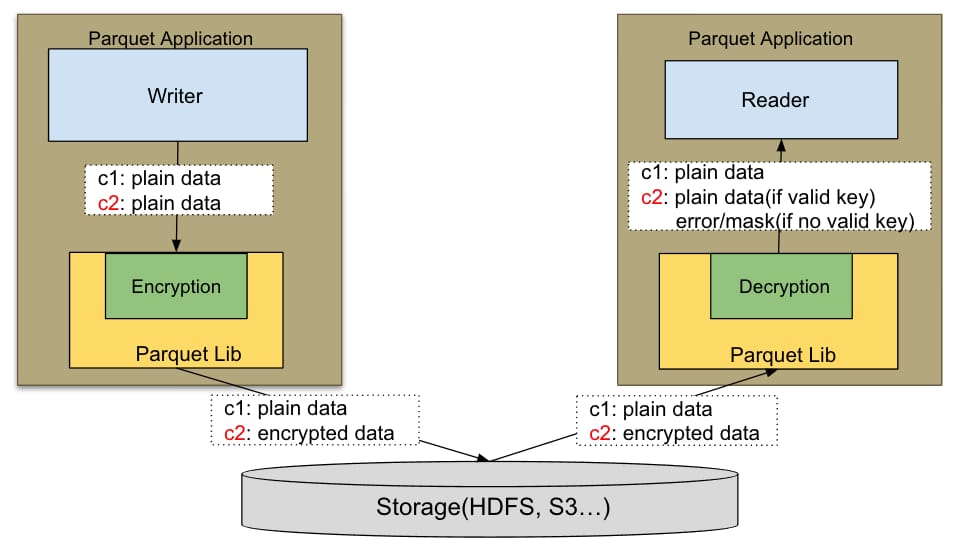

The schema-controlled Parquet™ encryption is shown above in Figure 2. The left side shows how encryption happens on the write path, and the right side shows the read and decryption path. In the example, there are only 2 columns (c1, c2); c2 is defined as a sensitive column while c1 is not. With Parquet™ encryption, c2 is encrypted before being sent to storage, which could be HDFS or cloud storage like S3, GCS, Azure Blob, etc. On the read path, the crypto metadata is stored in each file (as defined by the format), and the Parquet™ library uses it to determine which key to retrieve to decrypt the data. The KMS client is wrapped in the same plugin. If the user has permission on the key, the data is decrypted to plaintext.

The Schema Store includes the propagated dataset tagging information. As mentioned earlier, we use an ingestion metastore with Avro’s schema format for ingestion pipelines and Hive metastore for the ETL jobs. The Parquet™ writer generally needs to implement the WriteSupport interface. For example, Spark implements ParquetWriteSupport, which analyzes the schema and converts it from Spark to Parquet™. This behavior existed before the encryption feature was added. With schema-controlled encryption, we can simply extend the WriteSupport by adding a parser for the tagging information and attaching that information to the Parquet™ schema. The crypto retriever consumes the information and uses it to determine which keys to use for encryption. The KMS client wrapped inside the crypto retriever gets the key from the KMS.

Figure 3 below shows how the tag information in the schema controls the encryption in Parquet™. With this approach, as soon as datasets are tagged or the taggings are updated, the ingestion pipeline will pick up the latest tagging and update encryption accordingly. This feature is called auto-onboarding.

Performance Benchmarking

The Parquet™ community has done an overhead evaluation on Parquet™ encryption. More details about the performance can be found in the blog published by Gidon Gershinsky and Tomer Solomon. Overall, there is ~30% overhead with GCM mode on Java 8. With Java 11, the overhead is reduced to ~3%. CTR mode is 3x faster than GCM in Java 8.

The overhead evaluation from the Parquet™ community has covered variations like Java 8 vs. Java 11, GCM vs. CTR, etc., so we didn’t duplicate that work for those variants. But that evaluation was mainly focused on the throughput of encryption itself. Our evaluation focused more on end-to-end scenarios. In reality, there are several other variables:

- File read and write time is not the only factor contributing to the duration of a user’s query or ETL job, so the numbers in the blog differ considerably from the overhead seen per user query or ETL job in a real scenario.

- Not all columns need to be encrypted in most of the cases. The encryption overhead will be reduced when columns don’t need encryption.

- Encryption key operation time should also be accounted for in the overall duration, although that time can be on the millisecond level and might only change the final result in a very subtle way.

Our performance evaluation was performed on an end-to-end user query. We developed Spark jobs that encrypted 60% of the columns in a table, which is usually more than the percentage of columns that actually need encryption. On the decryption side, the Spark job read the tables and returned the count. The overhead is evaluated as ‘added time’ vs. the overall duration of the Spark jobs, which we think is closer to a real user scenario.

One challenge of the benchmarking jobs is that the storage latency for reading or writing files is not fixed. When comparing jobs with and without encryption, we sometimes found that jobs with encryption ran faster than jobs without it. This is mainly caused by variance in storage read and write latency. To overcome this uncertainty, we changed the Parquet™ code to measure the time that encryption adds to the overall duration of each run. The other overhead is the KMS operation time, as discussed above, which we also add to the overhead. We ran the jobs multiple times and calculated the average. The average overhead is calculated as below for writing and reading:

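Write overhead = (time added by encryption + KMS operation time) / overall duration of the write job, averaged over runs

Read overhead = (time added by decryption + KMS operation time) / overall duration of the read job, averaged over runs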

In our evaluation, we chose Java 8 and CTR mode and encrypted 60% of columns. The write overhead was 5.7% and the read overhead was 3.7%. Two things need to be pointed out: 1) encrypting 60% of columns is generally more than is needed in practice, and 2) a real user’s query or ETL job has many other tasks besides reading or writing files (e.g., table joins, data shuffling) that are far more time-consuming. In our evaluation, those expensive tasks were not included in the job. Considering these 2 factors, the relative overhead of reading and writing in real workloads would be even lower. In real scenarios, we don’t see encryption or decryption overhead as a concern.
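The overhead measure described in this section, the time added by encryption plus KMS operations relative to the overall job duration and averaged over runs, can be expressed in a few lines. The sketch averages the per-run ratios, which is one reasonable reading of the description, and the timings below are made-up numbers for illustration, not measurements from the evaluation.

```python
def average_overhead(runs):
    """Average, across runs, of (crypto time + KMS time) / total job duration.

    Each run is a tuple of (crypto_seconds, kms_seconds, total_seconds).
    """
    ratios = [(crypto + kms) / total for crypto, kms, total in runs]
    return sum(ratios) / len(ratios)


# Hypothetical timings for two runs of the same write job.
example_runs = [(5.0, 0.5, 100.0), (6.0, 0.0, 120.0)]
overhead = average_overhead(example_runs)
```

Measuring the added time inside the job, rather than subtracting the durations of two separate jobs, keeps storage latency noise out of the numerator.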

Takeaways

- Finer-grained access control protects data more precisely, and Parquet™ column encryption can be used to implement it through access control on keys. Data retention can likewise be implemented via key operations for column encryption.

- Controlling Parquet™ column encryption can be done in different ways and using schema to control it will make the solution neat without introducing extra RPC calls as overhead. That also makes a tag-driven access policy possible.

- Auto-onboarding is important when there are a huge number of tables to be onboarded. It not only saves manual effort but also can be easily built up as a system to monitor the data.

- The data lake usually has a large amount of data. Encrypting it means rewriting data at a large scale, which requires a high-throughput encryption tool. The innovations in Parquet™ have been merged to open source and will be released in Parquet™ 1.13.0. You can also port the change and build it yourself if you cannot wait for the release.

- The benchmarking of encryption overhead shows minimal impact and it is generally not a concern for the added latency.

Conclusion

In big data, fine-grained access control is needed and it can be implemented in different ways. Our method has been to combine it with encryption and deletion requirements so that one unified solution can solve the 3 problems of data access restrictions, retention, and encryption at rest.

Parquet™ column encryption is implemented at the file format level and provides encryption at rest. We presented the challenges of applying column encryption and provided the solution of schema-controlled column encryption, which avoids excessive RPC calls and reduces system reliability concerns.

Encrypting the existing data in storage created a challenge because of the data’s scale. We introduced a high-throughput tool that can encrypt the data 20 times faster.

When onboarding tables to the encryption system, doing so manually caused some problems. Auto-onboarding not only eliminates the manual effort but also has additional benefits in building up the encryption monitoring system.

When a user doesn’t have permission to read encrypted data, data masking can be used. Null-type data masking is easy to implement and less error-prone.

Xinli Shang

Xinli Shang is a Manager on the Uber Big Data Infra team, Apache Parquet PMC Chair, Presto Committer, and Uber Open Source Committee member. He is leading the Apache Parquet community and contributing to several other communities. He is also leading several initiatives on data format for storage efficiency, security, and performance. He is also passionate about tuning large-scale services for performance, throughput, and reliability.

Mohammad Islam

Mohammad Islam is a Distinguished Engineer at Uber. He currently works within the Engineering Security organization to enhance the company's security, privacy, and compliance measures. Before his current role, he co-founded Uber’s big data platform. Mohammad is the author of an O'Reilly book on Apache Oozie and serves as a Project Management Committee (PMC) member for Apache Oozie and Tez.

Pavi Subenderan

Pavi Subenderan is a Senior Software Engineer on Uber’s Data Infra team. His area of focus is on Parquet, Encryption, and Security. At Uber, he has worked on Parquet encryption and Security/Compliance for the past 3 years.

Jianchun Xu

Jianchun Xu is a Staff Software Engineer on Uber's Data Infra team. He mainly works on big data infra and data security. He also has extensive experience in service deployment platforms, developer tools, and web/JavaScript engines.

Posted by Xinli Shang, Mohammad Islam, Pavi Subenderan, Jianchun Xu